Tensorflow 代码实现

def create_content_loss(model, content_image): content_layer_names = ['conv3_1/conv3_1'] # we use the toppest layer for content loss layers = model.get_layer_tensors(content_layer_names) content_dict = model.create_feed_dict(image=content_image) content_values = session.run(layers, feed_dict= content_dict) content_values = tf.constant(value) stylized_values = layers #laybers will be evaluated during runtime with model.graph.as_default(): layer_losses = [] for v1, v2 in zip(content_values, stylized_values): loss = mean_squared_error(v1, v2) layer_losses.append(loss) total_loss = tf.reduce_mean(layer_losses) return total_loss 计算风格偏差比较两个图片的风格偏差会稍微复杂一点。如何用数学的式子体现两个内容完全不同的图片,风格一样呢?光光比较他们的特征是不够的,因为一个图里有桥,另一个图里可能没有。因此我们要比较两个图的特征互相之间的关系。这简直是一个天才的想法!我们用 Gram Matrix 来描述多个特征相互之间的关系,然后来比较两个图的 Gram Matrix 的距离来衡量风格的相似程度。如果 Gram Matrix 相近,即特征相互之间的关系相近,那也就说明风格相近。与内容偏差不同,风格偏差与宏观微观的都有关系,所以我们又算出每一层的风格偏差,再求一个加权平均就好了。

Tensorflow 代码实现

def gram_matrix(tensor): #gram matrix is just a matrix multiply it's transpose shape = tensor.get_shape() num_channels = int(shape[3]) matrix = tf.reshape(tensor, shape=[-1, num_channels]) gram = tf.matmul(tf.transpose(matrix), matrix) return gram def create_content_loss(model, content_image): #we use all 13 conv layers in VGG16 to compute loss function content_layer_names = ['conv1_1/conv1_1', 'conv1_2/conv1_2', 'conv2_1/conv2_1', 'conv2_2/conv2_2', 'conv3_1/conv3_1', 'conv3_2/conv3_2', 'conv3_3/conv3_3', 'conv4_1/conv4_1', 'conv4_2/conv4_2', 'conv4_3/conv4_3', 'conv5_1/conv5_1', 'conv5_2/conv5_2', 'conv5_3/conv5_3'] layers = model.get_layer_tensors(content_layer_names) gram_layers =gram_matrix(layers) style_dict = model.create_feed_dict(image=style_image) style_values = session.run(gram_layers, feed_dict= style_dict) style_values = tf.constant(style_values) stylized_values = gram_layers #gram_layers will be evaluated during runtime with model.graph.as_default(): layer_losses = [] for v1, v2 in zip(stylized_values, style_values): loss = mean_squared_error(v1, v2) layer_losses.append(loss) total_loss = tf.reduce_mean(layer_losses) return total_loss 如何最小化?对于一个标准的优化问题,梯度下降(Gradient Descent) 一定是可以用的。我们只需要把三张图片全部输入网络,计算出对应的内容偏差(用最后一层)和风格偏差(用每一层),加权平均一下以后获得总偏差,再相对于合成图片求出梯度,用梯度去更新这个图片就可以了。

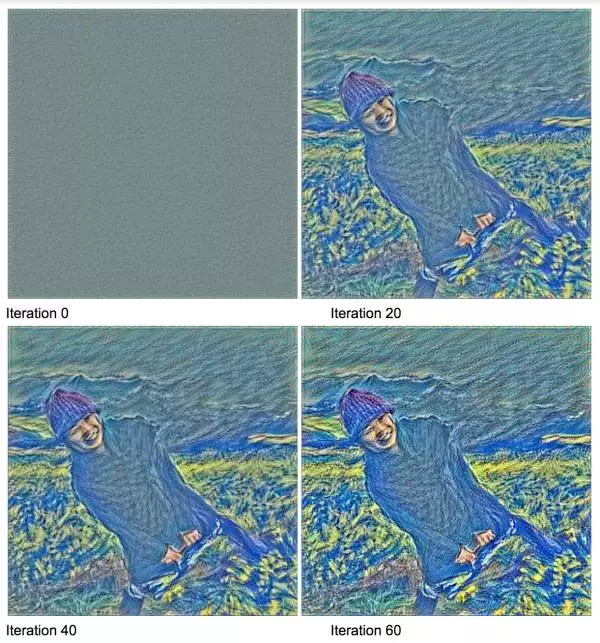

def style_transfer(content_image, style_image, weight_content=1.5, weight_style=10.0, num_iterations=120, step_size=10.0): model = vgg16.VGG16() session = tf.InteractiveSession(graph=model.graph) loss_content = create_content_loss(content_image, model) loss_style = create_style_loss(style_image, model) loss_combined = weight_content * loss_content + \ weight_style * loss_style gradient_op = tf.gradients(loss_combined, model.input) # The mixed-image is initialized with random noise. # It is the same size as the content-image. #where we first init it mixed_image = np.random.rand(*content_image.shape) + 128 for i in range(num_iterations): # Create a feed-dict with the mixed-image. mixed_dict = model.create_feed_dict(image= mixed_image) #compute the gradient with predefined gradient_op grad = session.run(gradient_op, feed_dict= mixed_dict) grad = np.squeeze(grad) step_size_scaled = step_size / (np.std(grad) + 1e-8) #update the mixed_image mixed_image -= grad * step_size_scaled mixed_image = np.clip(mixed_image, 0.0, 255.0) session.close() return mixed_image 效果如何?除了稍微慢了一点,效果还是棒棒哒!代码已经提多了,直接上图!

内容偏差的权重较大时

风格偏差的权重较大时

Gatyes后续有发表了一些相关的论文,例如下面这个可以保存内容图片的颜色,只在亮度通道中进行风格转化

Gatys et al, “Preserving Color in Neural Artistic Style Transfer”, arXiv 2016

Ruder在视频上进行风格转化,解决了针对不同像素,风格转化不连续的问题

Ruder et al, “Artistic style transfer for videos”, arXiv 2016

在特征空间中使用局部匹配来计算风格偏差,效果比使用 Gram Matrix 更好

Li and Wand, “Combining Markov Random Fields and Convolutional Neural Networks for Image Synthesis”, CVPR 2016

对于高分辨率图片的快速风格转化

Johnson et al, “Perceptual Losses for Real-Time Style Transfer and Super-Resolution”, ECCV 2016

使用同一个网络同时进行多种风格的转化

Dumoulin et al, “A Learned Representation for Artistic Style”, arXiv 2016

这个话题现在很火,大家去读个几篇,可能就能有自己的想法了!和女神 Kristen 合作论文也指日可待了!

参考资料[1] Gatys et al, “Image Style Transfer using Convolutional Neural Networks”, CVPR 2016

[2] Stanford CS 20SI: Tensorflow for Deep Learning Research

[3] Convolutional neural networks for artistic style transfer by Harish Narayanan

[4] How to Do Style Transfer with Tensorflow by Siraj Raval

深度学习,是诸如Prisma的图像应用、人工智能、无人驾驶、机器自动翻译、人脸识别背后的核心技术。如果说要预测未来5年最抢手、热门的技术人才,掌握深度学习的程序员、工程师,也一定会位于榜单前端。

Udacity 联手 Youtube 技术网红色拉杰(Siraj Raval),全球同步推出的 深度学习基石纳米学位 一经推出就广受好评。没有跟上第一,二轮班次?没关系,第三期报名机会现已展开!

转载请注明出处。

相关文章

相关文章 精彩导读

精彩导读 热门资讯

热门资讯 关注我们

关注我们